Understanding what language tasks you are solving

Your very first step towards building language technology applications

Welcome, anon! Another week, another set of exciting developments in language technology applications.

The visual language model Flamingo🦩 released by DeepMind shows that large language models are getting ridiculously good at conversing with humans about images (amongst other things). Some of my favorite examples:

Despite the surprising generalization of 🦩, there are several limitations, including hallucinations and a mediocre understanding of spatial arrangement, among others described by the lead author of 🦩 in his Twitter 🧵.

We will get to the point where we could build similar language task prototypes, albeit with a lot less computation and data. But before we do that, we need to understand what kind of language technology task we are trying to solve.

There exists a great toolbox of language technology solutions, and more than 90% of the time it doesn’t make sense to reinvent the wheel. BowTiedBear put it quite nicely.

Let’s get into this!

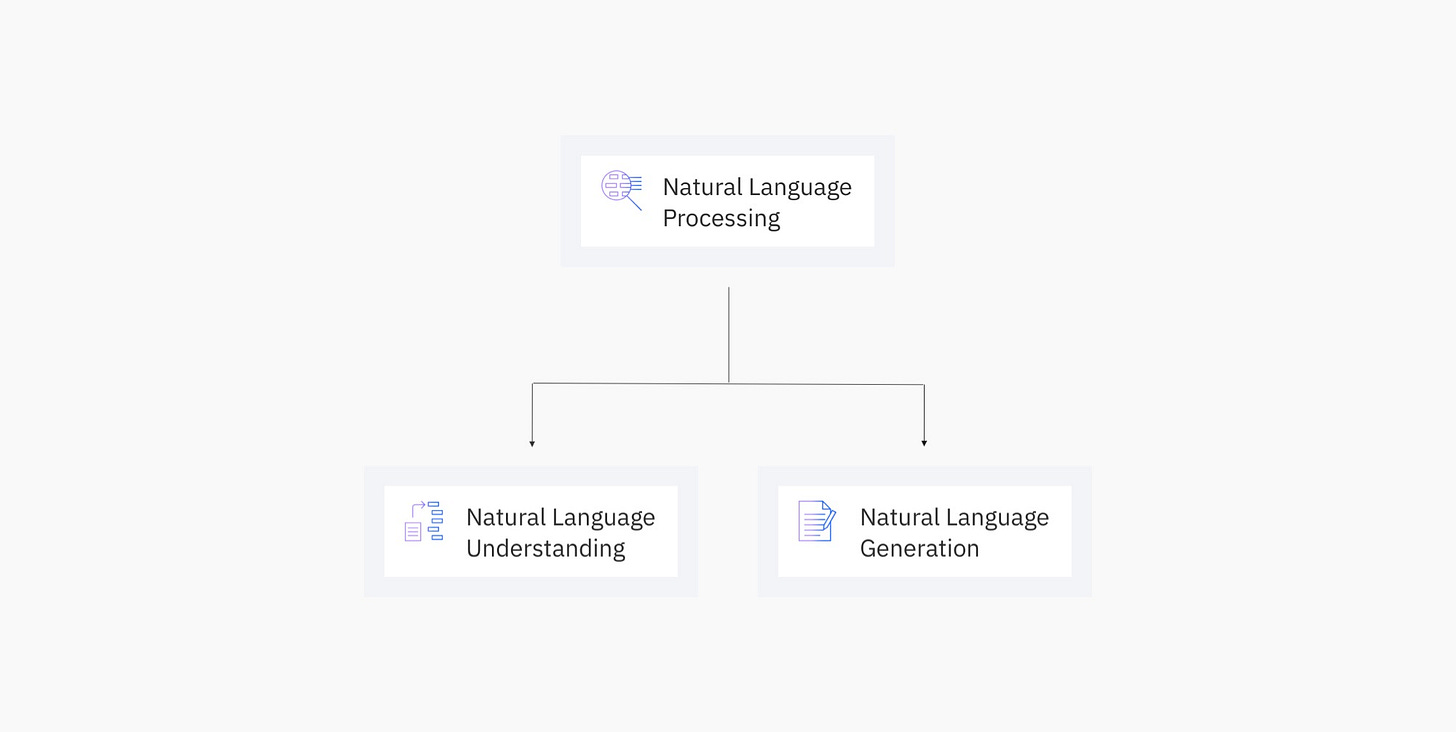

Natural Language Understanding (NLU) vs Natural Language Generation (NLG)

Before we jump into individual tasks, we need to distinguish natural language understanding tasks (abbreviated as NLU) from natural language generation tasks (abbreviated as NLG). It is not that hard to tell them apart.

If your end goal is to generate another text (for example translation or summarization), then the task falls under the NLG category. If you want to assign a class to a whole text (for example sentiment analysis or topic classification) or assign a label to certain words in a sentence (for example labeling Los Angeles and New York City as cities in the sentence “I am flying from Los Angeles to New York City”), then the task falls under the NLU category.

There are a few tasks where the line between understanding and generation becomes blurred, which we discuss in a later section. For now, let’s dive deeper into each category.

Typical NLU tasks

Sentiment Analysis, Document Classification, and Topic Classification (broadly multi-class classification)

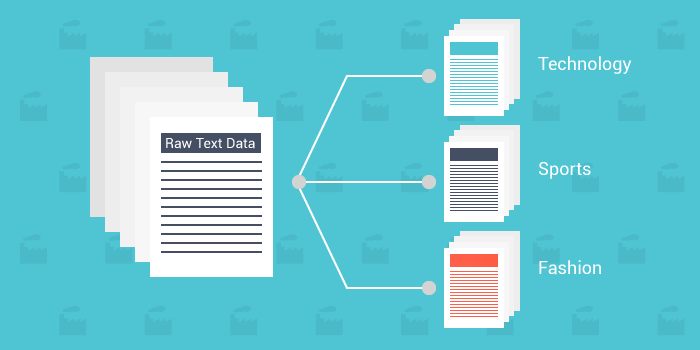

Your input is a sentence, a paragraph, or a whole document and you would like to assign one label to the entire input. Some representative examples:

Predict the sentiment (positive/negative) of a movie review.

Predict the topic of a document (for example a technology or sports article).

Predict whether an email is spam or not.

There are some cases where the same document can have multiple output classes at once. We refer to these as multi-label examples (in contrast to multi-class, where each document has a single label). For example, an article about the NFL putting sensors inside footballs could be labeled as both a sports and a technology article. But since these examples are not very frequent, for simplicity we assume that these tasks are multi-class.
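To make the multi-class vs multi-label difference concrete, here is a minimal sketch of how the labels themselves could be stored. The label set and the two helper functions are made up for illustration and are not tied to any particular library.

```python
# Hypothetical label set, chosen only for illustration.
LABELS = ["sports", "technology", "politics"]

def encode_multi_class(label):
    """Multi-class: exactly one label per document, stored as its index."""
    return LABELS.index(label)

def encode_multi_label(labels):
    """Multi-label: any number of labels per document, stored as a 0/1 vector."""
    return [1 if name in labels else 0 for name in LABELS]

# A sports-only article gets a single class index.
print(encode_multi_class("sports"))                  # 0
# The NFL sensor article carries two labels at once.
print(encode_multi_label({"sports", "technology"}))  # [1, 1, 0]
```

The only point here is the shape of the target: one index per document in the multi-class case, one yes/no decision per label in the multi-label case.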

Named Entity Recognition, Slot Labeling (broadly span detection and classification)

Your input is again a sentence, a paragraph, or a whole document, and now you would like to find a span (a slice of the text input) that corresponds to the desired class. Named Entity Recognition (NER) is a prototypical example of such a task.
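As a rough sketch of what a span looks like as data, the snippet below stores each entity as a start offset, an end offset, and a label. The `Span` class and the `find_span` helper are hypothetical; `find_span` just locates a known phrase by string match, whereas a real NER model would predict the offsets.

```python
from dataclasses import dataclass

@dataclass
class Span:
    start: int   # index of the first character of the span
    end: int     # index one past the last character (Python slice convention)
    label: str

def find_span(text, phrase, label):
    """Illustrative helper: locate a known phrase instead of predicting it."""
    start = text.index(phrase)
    return Span(start, start + len(phrase), label)

text = "I am flying from Los Angeles to New York City"
spans = [
    find_span(text, "Los Angeles", "CITY"),
    find_span(text, "New York City", "CITY"),
]

for span in spans:
    # Slicing the text with the offsets recovers the entity string.
    print(span.label, repr(text[span.start:span.end]), (span.start, span.end))
```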

There are countless applications of NER: extracting entities from financial and medical documents, extracting items and their amounts from receipts, and so on.

Many other applications that require finding and classifying spans in a sentence can be recast as an NER task. One such example is slot labeling, a task used to extract the entities in a user utterance that are related to the intent (for example, in the utterance “I would like to book a flight to Los Angeles”, extract “Los Angeles” as the arrival city).
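One common way to cast span tasks like slot labeling as per-word prediction is BIO tagging: the first word of a span gets a `B-` tag, the following words of the same span get `I-`, and everything else gets `O`. The post does not commit to a particular scheme, so treat this as one possible sketch; the `bio_tags` helper and the `arrival_city` slot name are assumptions for illustration.

```python
def bio_tags(tokens, slot_tokens, slot_name):
    """Tag the first occurrence of slot_tokens inside tokens with B-/I- labels."""
    tags = ["O"] * len(tokens)
    n = len(slot_tokens)
    for i in range(len(tokens) - n + 1):
        if tokens[i:i + n] == slot_tokens:
            tags[i] = "B-" + slot_name          # beginning of the slot
            for j in range(i + 1, i + n):
                tags[j] = "I-" + slot_name      # inside the slot
            break
    return tags

utterance = "I would like to book a flight to Los Angeles"
tokens = utterance.split()  # naive whitespace tokenization
tags = bio_tags(tokens, ["Los", "Angeles"], "arrival_city")

for token, tag in zip(tokens, tags):
    print(f"{token}\t{tag}")
```

With this framing, a model only has to predict one tag per word, and the spans are recovered by grouping each `B-` tag with the `I-` tags that follow it.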

Typical NLG tasks

Broadly, whenever you need to generate text, the task falls under the natural language generation (NLG) category. It can be anything from classical applications like machine translation and text summarization to tasks like marketing copy generation and resume rewriting.

People typically separate open-ended text generation from other text generation tasks. As the name says, open-ended tasks like poetry generation and storytelling have an infinite number of possibilities for what text you could generate. This is unlike machine translation or text summarization, where the generated text needs to stay faithful to your source.

When the line between NLU and NLG becomes blurred

A chatbot is a pretty typical example that can be approached either as an NLU task or as an NLG task, or potentially as a combination of both.

As an NLU task, you classify the user utterance with an intent label (remember multi-class classification from above? 🙃 ) and then reply with one of a fixed set of responses.

For example, if the user says “withdraw a cash”, you classify it with a special intent “money_withdraw” and ask follow-up questions like “What is the amount?” using rules. The upside is that the response behaviour of the model stays consistent. The downside is that this system won’t be able to handle user questions that are outside those intents, which makes these chatbots feel frustrating sometimes.
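Here is a minimal sketch of the NLU-style chatbot described above. The keyword matcher is only a stand-in for a trained intent classifier, and all intents other than `money_withdraw`, the keywords, and the fallback message are invented for illustration.

```python
# Hypothetical intents, keywords, and responses, for illustration only.
INTENT_KEYWORDS = {
    "money_withdraw": {"withdraw", "cash"},
    "check_balance": {"balance"},
}
RESPONSES = {
    "money_withdraw": "What is the amount?",
    "check_balance": "Which account would you like to check?",
}
FALLBACK = "Sorry, I can't help with that yet."

def classify_intent(utterance):
    """Stand-in for a trained classifier: pick the intent with the most keyword hits."""
    words = set(utterance.lower().split())
    best_intent, best_hits = None, 0
    for intent, keywords in INTENT_KEYWORDS.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best_intent, best_hits = intent, hits
    return best_intent  # None when no intent matches

def reply(utterance):
    """Rule-based response: each known intent maps to one fixed reply."""
    return RESPONSES.get(classify_intent(utterance), FALLBACK)

print(reply("withdraw a cash"))   # What is the amount?
print(reply("tell me a joke"))    # Sorry, I can't help with that yet.
```

The second call shows the downside in action: anything outside the known intents ends up at the same fallback reply.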

As an NLG task, you simply ask the model to generate a response given the text. You are no longer restricted to a fixed set of questions the model can reply to (as long as it has seen similar conversations somewhere in the data), but you are potentially opening a Pandora’s box of undesirable responses.

Something to keep in mind: There are pros and cons to casting the chatbot application as either an understanding or a generation task. There is never a clear answer on which one to use, but be aware of the limitations of each framework. We will likely come back to this tradeoff in one of the later language application posts.

Voila! Hope you learned more about the types of language tasks. We will use this framework to start building our first language application in the next post.

I would really appreciate it if you could recommend this substack to your friends who are interested in this topic!

See you in a week or two!

![Everyone's AI: Explore AI Model #11, Is there a chatbot that goes beyond the GPT-3? BlenderBot 2.0 (AI Network, Medium)](https://substackcdn.com/image/fetch/$s_!hudr!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F7dcee1b9-6c06-4b5d-8e6a-df29b52aa4b0_1400x962.png)
