Parameter-efficient transfer learning for NLP

Your guide to the most efficient and the most effective transfer learning strategy in the GPT-3 era

Table of Contents

Introduction

Transfer learning for NLP tasks

Efficient transfer learning for NLP

Next Steps

Introduction

We are currently living through a paradigm shift in natural language technology. As we scale up pre-trained models (BERT, GPT-3, etc.), we see a clear trend of improved performance on downstream tasks, the actual tasks we want to solve, such as document classification and question answering. Over the last 5 years, state-of-the-art language models grew from ~94 million to 540 billion trainable parameters.

That is a whopping increase of almost 6000x in parameter count over 5 years! Of course, scaling up is neither easy nor cheap. It also becomes extremely expensive to copy the model and fine-tune it on each downstream task independently. This motivated the prompting approach for GPT-3: you write a few examples directly into the input, and GPT-3 uses them as context to make predictions on new examples.
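To make prompting concrete, here is a minimal sketch of how a few-shot prompt might be assembled for the BBC News task used later in this post. The function `build_few_shot_prompt` and the "Article:/Category:" template are illustrative assumptions, not a format GPT-3 requires; the resulting string would be sent to the model, which is expected to complete the final "Category:" line.

```python
def build_few_shot_prompt(examples, new_text):
    """Assemble a few-shot classification prompt (hypothetical template).

    examples: list of (text, label) pairs shown to the model as context.
    new_text: the document we want the model to classify.
    """
    blocks = [f"Article: {text}\nCategory: {label}" for text, label in examples]
    # The last block leaves the category empty for the model to fill in.
    blocks.append(f"Article: {new_text}\nCategory:")
    return "\n\n".join(blocks)


demo = [
    ("The striker scored twice in the second half.", "sport"),
    ("Shares fell after the quarterly earnings report.", "business"),
]
prompt = build_few_shot_prompt(demo, "The new phone ships with a faster chip.")
print(prompt)
```

Every labeled example consumes part of the model's limited input, which is why this approach cannot absorb a moderate or large training set.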

While prompting is easy to use, there are several limitations to it.

It doesn't scale to moderate or large datasets, since only a handful of examples fit into the model's input.

It is sensitive to the design of the prompt.

Fine-tuning gets around both problems.

Transfer Learning for NLP tasks

We have a downstream task for which we would like to build an accurate NLP classifier. Let's take document classification, one of the most widely used commercial NLP applications. We use the public BBC News document classification dataset, where the goal is to classify each BBC article into a category such as sport, business or politics. You can find many other representative downstream tasks in the GLUE benchmark.

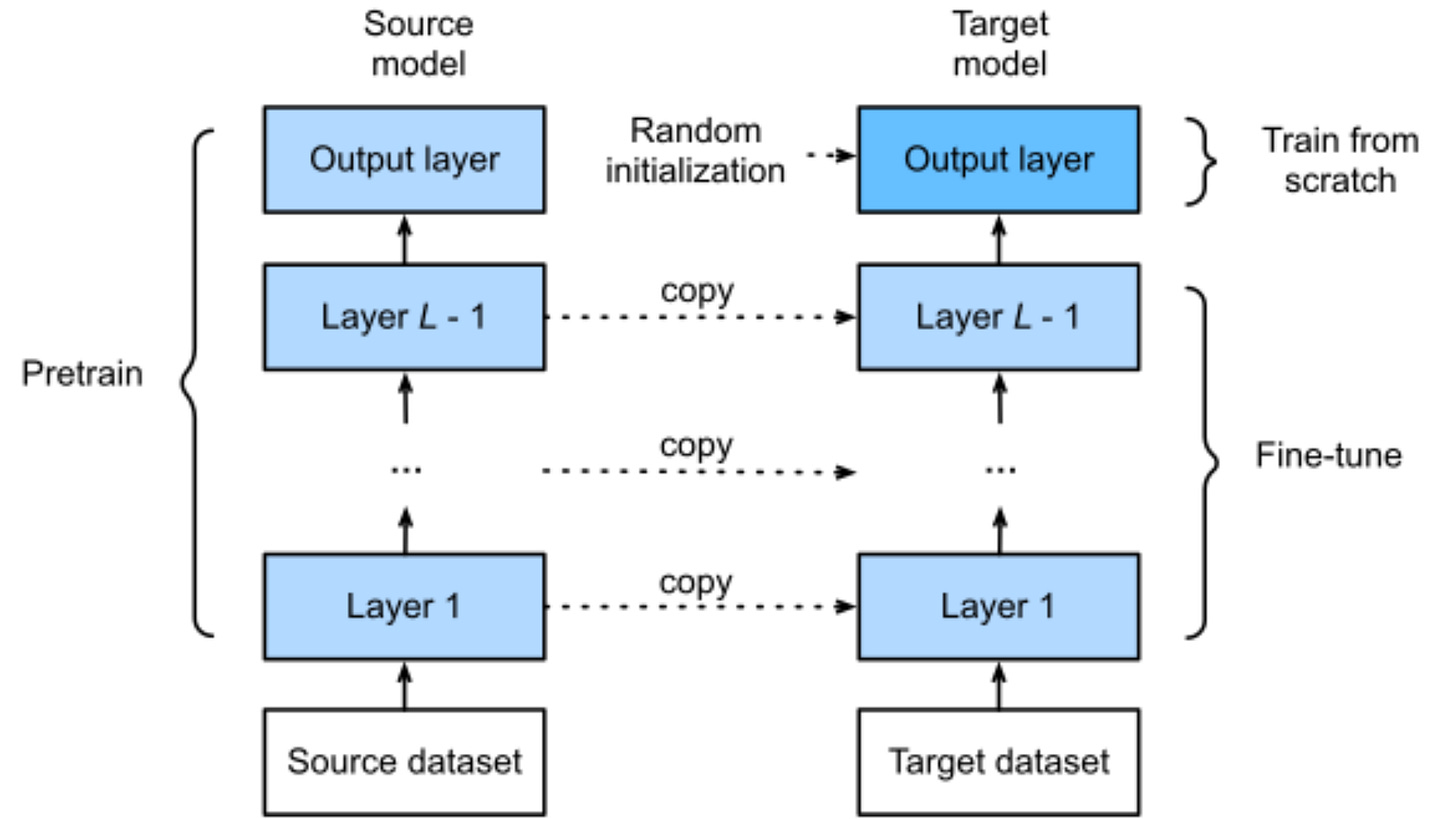

We start with an accurate pre-trained language model such as RoBERTa, which has accumulated general knowledge about language by filling in randomly masked words in a large corpus of web text. The model knows a lot about language in general but still has no clue about the task we want to solve. That's why we need a few extra steps of training on the downstream task, also known as fine-tuning.

Fine-tuning copies the weights of the original language model and updates them so that the model maximizes its performance on the downstream task. All trainable parameters of the original network are copied except for the output layer, which is replaced by a randomly initialized layer that predicts one of the N output classes.

Mathematically, these updates are expressed with gradient descent: move the parameters W towards the point where the loss function L is minimal. The loss depends on the input X and the correct label Y; think of it as a differentiable version of negative accuracy.

Simply think of it as rolling down a hill towards the point of minimum loss or, equivalently, maximum accuracy.
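The pre-training objective can be sketched in a few lines. This is a simplified illustration under stated assumptions: it splits on whitespace instead of using subword tokens, and every selected word becomes `<mask>`, whereas RoBERTa selects about 15% of subword tokens and replaces only most of them with `<mask>`. The function `mask_tokens` is hypothetical.

```python
import random


def mask_tokens(tokens, mask_prob=0.15, mask_token="<mask>", seed=None):
    """Hide a random subset of tokens; the model is trained to predict the originals."""
    rng = random.Random(seed)
    masked, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(mask_token)
            targets[i] = tok  # position -> word the model must recover
        else:
            masked.append(tok)
    return masked, targets


sentence = "the match ended with a late goal from the home side".split()
# a higher masking rate than 15% just to make the effect visible on a short sentence
masked, targets = mask_tokens(sentence, mask_prob=0.3, seed=1)
print(" ".join(masked))
print(targets)
```

The loss during pre-training only looks at the masked positions stored in `targets`, so no human labels are needed.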
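Written as a formula, one gradient descent step updates the parameters as below. The learning rate η (the step size) is a symbol introduced here because the text does not name it; in practice the gradient is computed on mini-batches, and the Hugging Face Trainer used later applies AdamW by default rather than plain gradient descent.

$$
W \leftarrow W - \eta \, \nabla_W \mathcal{L}(X, Y; W)
$$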
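Here is a toy version of rolling down the hill in NumPy. It is a sketch that assumes a one-parameter model y ≈ w·x and a mean squared error loss, instead of the classification loss used for real fine-tuning; the data, learning rate and number of steps are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=100)
y = 3.0 * x  # the ideal weight is 3.0


def loss(w):
    # mean squared error: a differentiable stand-in for "negative accuracy"
    return np.mean((w * x - y) ** 2)


def grad(w):
    # derivative of mean((w*x - y)^2) with respect to w
    return np.mean(2.0 * (w * x - y) * x)


w = 0.0
learning_rate = 0.1
for step in range(200):
    w = w - learning_rate * grad(w)  # step downhill, against the gradient

print(round(w, 4), loss(w))
```

Each update shrinks the gap between w and 3.0 by a constant factor, so w settles at the bottom of the loss "hill".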

Code

Thanks to the low-code Hugging Face Transformers library and free GPUs in Google Colab, it is simple to express the full fine-tuning process in a few lines of code and train a state-of-the-art model on BBC News document classification for free within 5-10 minutes.

The same code is also available as an interactive Colab notebook (double-check that the runtime type is set to GPU), then select Runtime -> Run all. Thanks to BowTiedCelt for testing it. Make sure to check out his Substack.

```python
# STEP 1
# install the Hugging Face transformers library
!pip install transformers
# install the datasets library that hosts a bunch of NLP datasets
!pip install datasets
# install the evaluate library that provides the accuracy metric
# (datasets.load_metric has been removed from recent versions of datasets)
!pip install evaluate

# STEP 2
# load the BBC News document classification dataset
from datasets import load_dataset

dataset = load_dataset("SetFit/bbc-news")

# STEP 3
# pre-process the dataset by tokenizing it
from transformers import AutoTokenizer

# load the tokenizer that matches the pre-trained model
tokenizer = AutoTokenizer.from_pretrained("roberta-base")

# tokenization function: pad/truncate every article to the model's maximum length
def tokenize_function(examples):
    return tokenizer(examples["text"], padding="max_length", truncation=True)

# tokenized datasets
tokenized_datasets = dataset.map(tokenize_function, batched=True)

# shuffle the training and evaluation datasets
train_dataset = tokenized_datasets["train"].shuffle(seed=42)
eval_dataset = tokenized_datasets["test"].shuffle(seed=42)

# STEP 4
# check the number of classes in the dataset (labels are 0 .. N-1)
import numpy as np

num_classes = max(dataset["train"].unique("label")) + 1
print(f"Number of classes {num_classes}")
# Number of classes 5

# id2label maps the integer label to the label string
id2label = {}
for label_id, label_text in zip(dataset["train"]["label"], dataset["train"]["label_text"]):
    id2label[label_id] = label_text
print(id2label)
# {0: 'tech', 1: 'business', 2: 'sport', 3: 'entertainment', 4: 'politics'}

# STEP 5
# load the pre-trained RoBERTa base language model with a new,
# randomly initialized classification head for num_classes labels
from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained("roberta-base", num_labels=num_classes)

# STEP 6
# load the default training hyperparameters and evaluate after every epoch
# (older transformers versions call this argument evaluation_strategy)
from transformers import TrainingArguments

training_args = TrainingArguments(output_dir="bbc_news_trainer", eval_strategy="epoch")

# STEP 7
# load the accuracy metric and implement the compute_metrics function
import evaluate

metric = evaluate.load("accuracy")

def compute_metrics(eval_pred):
    """
    Given the logits (unnormalized scores) and labels, calculate the accuracy.
    """
    logits, labels = eval_pred
    predictions = np.argmax(logits, axis=-1)
    return metric.compute(predictions=predictions, references=labels)

# STEP 8
# load the Trainer class that will train and evaluate the model
from transformers import Trainer

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
    compute_metrics=compute_metrics,
)

# STEP 9
# run the training
trainer.train()
```

After running the Colab notebook yourself, you should see a very high accuracy of ~98% on the evaluation set.

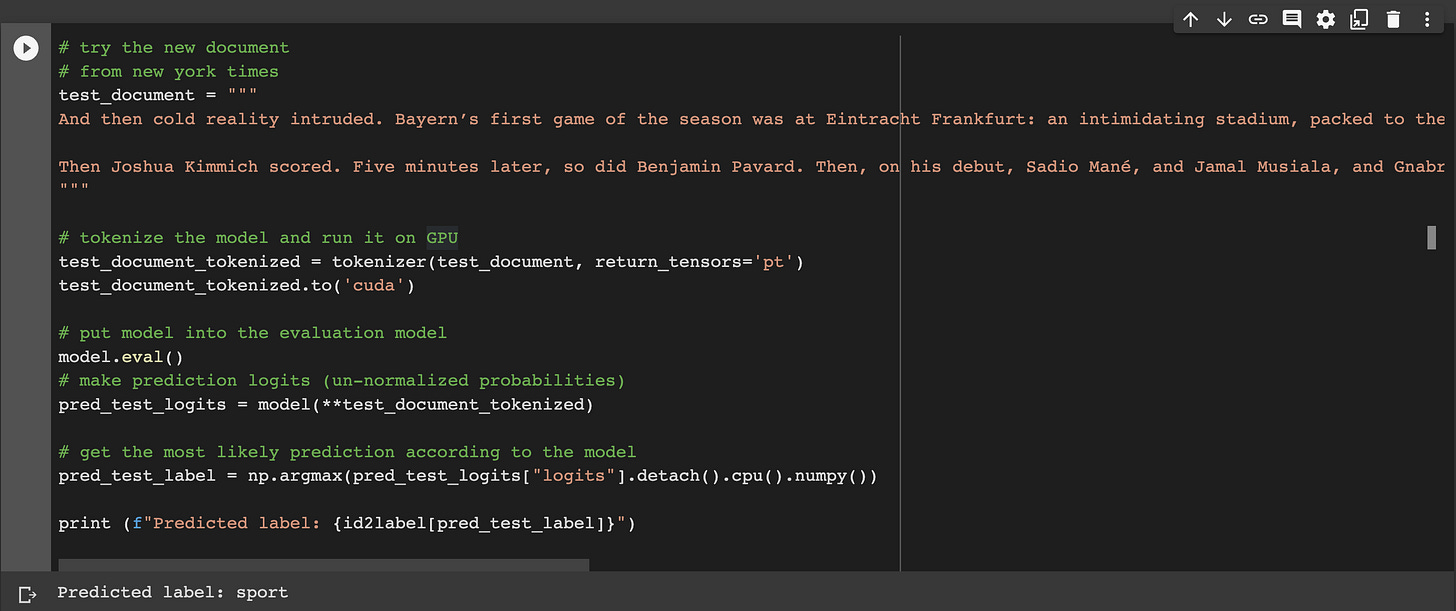

You can try the fine-tuned model on a recent New York Times article about the Bayern Munich soccer team:

```python
# try a new document from the New York Times
test_document = """
And then cold reality intruded. Bayern’s first game of the season was at Eintracht Frankfurt: an intimidating stadium, packed to the rafters, cheering on a team that had won the Europa League only a few months earlier. It was no gentle start. Not for the first five minutes, anyway.

Then Joshua Kimmich scored. Five minutes later, so did Benjamin Pavard. Then, on his debut, Sadio Mané, and Jamal Musiala, and Gnabry himself, and now the Bundesliga season was precisely 43 minutes old, and all of the hope had been extinguished and all of the what ifs had been answered. Just like that, for another year, it was over.
"""

import torch

# tokenize the document and move it to the same device as the model (the GPU in Colab)
test_document_tokenized = tokenizer(test_document, truncation=True, return_tensors="pt").to(model.device)

# put the model into evaluation mode
model.eval()

# compute the prediction logits (unnormalized scores) without tracking gradients
with torch.no_grad():
    pred_test_logits = model(**test_document_tokenized).logits

# get the most likely prediction according to the model
pred_test_label = int(np.argmax(pred_test_logits.cpu().numpy()))
print(f"Predicted label: {id2label[pred_test_label]}")
```

Unsurprisingly, the prediction is correct!

Downsides

Fine-tuning has one major downside: it gets very expensive for today's largest pre-trained models (with >20 billion parameters). The cost is not only storage, since every task needs its own full copy of the weights, but also the hardware needed to fit such large models during training. We can get around this limitation with parameter-efficient fine-tuning, which trains only a few parameters while achieving results comparable to full fine-tuning.
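A back-of-the-envelope calculation shows why. The sketch below assumes 2 bytes per parameter for a half-precision copy of the weights and about 16 bytes per parameter while training with mixed-precision Adam (half-precision weights and gradients, full-precision master weights and two Adam moment estimates). Both figures are common rules of thumb rather than numbers from this post, the function name is hypothetical, and activation memory is ignored.

```python
def gigabytes(num_bytes):
    return num_bytes / 1e9


def fine_tuning_costs(num_params, num_tasks, bytes_per_param_stored=2, bytes_per_param_training=16):
    """Rough storage for one full copy per task, and memory to train one copy."""
    storage = num_params * bytes_per_param_stored * num_tasks
    training = num_params * bytes_per_param_training  # excludes activations
    return gigabytes(storage), gigabytes(training)


storage_gb, training_gb = fine_tuning_costs(num_params=20e9, num_tasks=10)
print(f"Storage for 10 fine-tuned copies: {storage_gb:.0f} GB")
print(f"Memory to train one copy (excluding activations): {training_gb:.0f} GB")
```

Both numbers grow linearly with model size, and the storage term also grows with every new task, which is exactly what parameter-efficient methods attack.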

Efficient Transfer Learning for NLP

The goal is to achieve results comparable to full fine-tuning while keeping as much of the original network fixed as possible. I will highlight several key methods for parameter-efficient transfer.

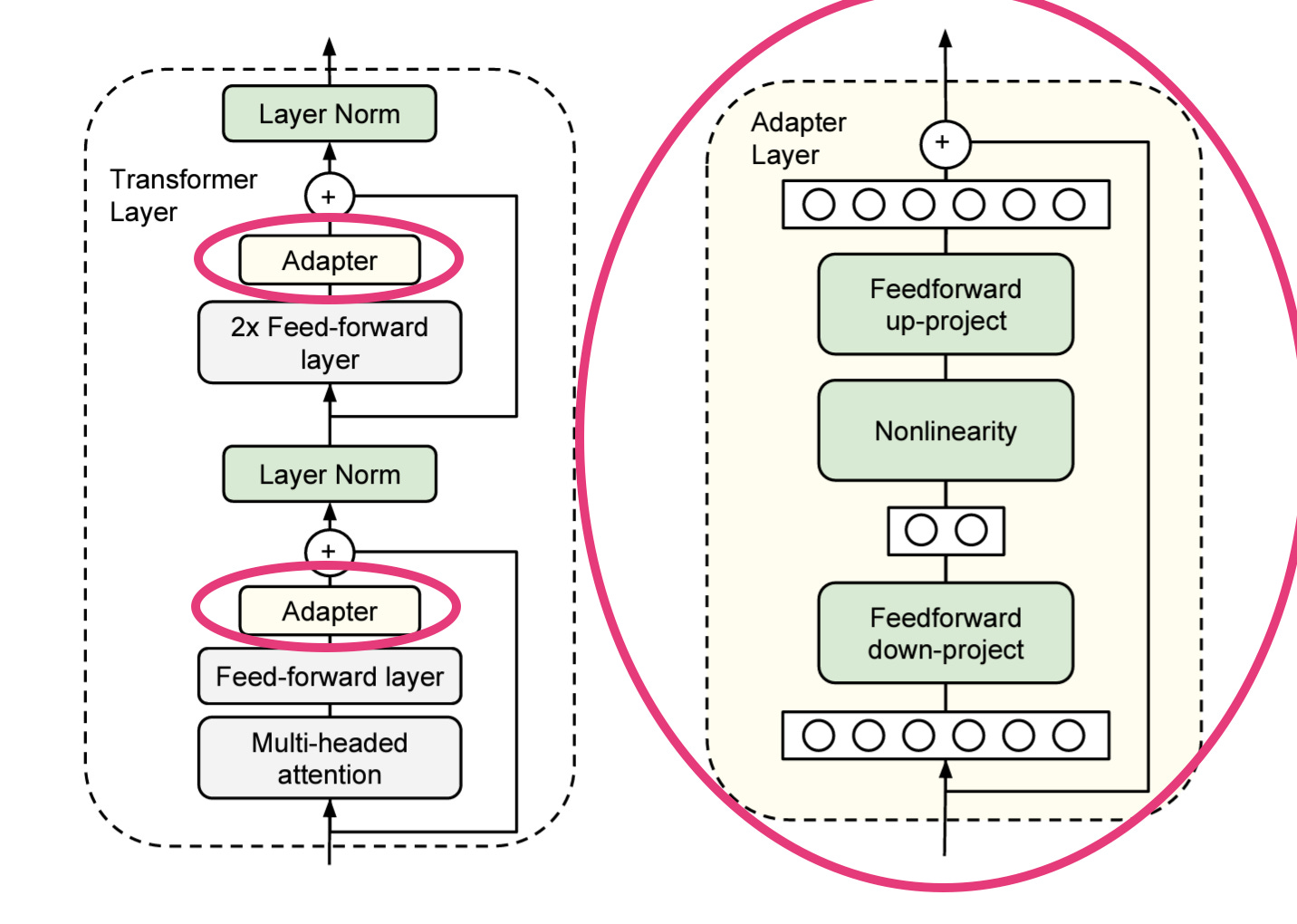

Adapter modules

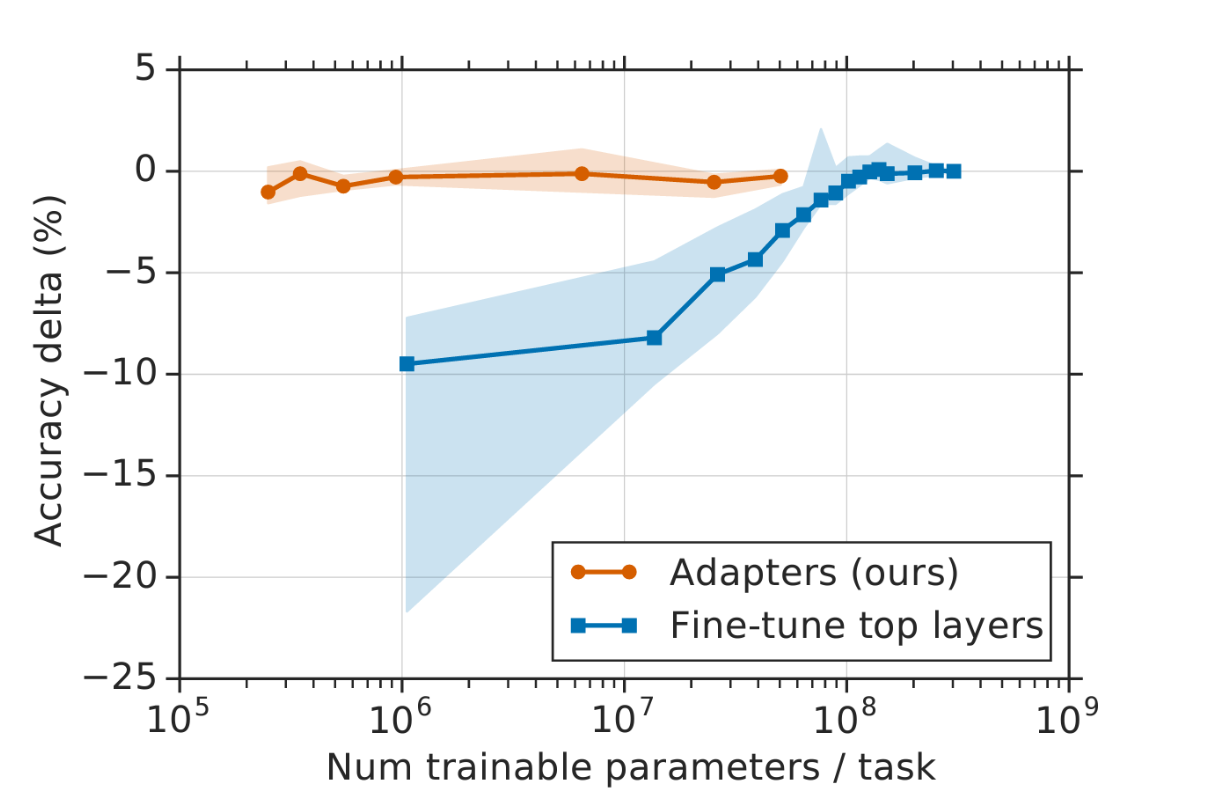

Adapter modules add a small number of new parameters to the pre-trained model. During training, we only update the parameters inside the adapter modules and the output layer; the remaining parameters of the original network stay fixed.

Adapter modules are typically added to every Transformer layer of the pre-trained language model. Even though a typical Transformer model has 12 or more layers, adapters add only a small number of parameters per task: around 0.5-8% of the parameters of the original network end up being trained.

To achieve strong results, the authors of the adapter modules paper highlight the importance of a near-identity initialization of the adapter modules. This ensures that the original network is minimally affected when fine-tuning starts.

Adapters attain performance competitive with full fine-tuning. Thanks to these results, the adapter modules paper quickly caught the attention of NLP practitioners: it has gathered more than 500 citations in the last 3 years.

Many follow-up papers have extended adapters and demonstrated their effectiveness.

I would like to highlight the AdapterFusion paper, which extends adapter modules to multi-task learning. Multi-task learning studies approaches that enable a single deep neural network to perform multiple tasks. AdapterFusion first trains an independent adapter for each task and then adds a fusion layer that learns to combine the outputs of these adapters, which enables information sharing between them. This sharing helps the model get stronger on each individual task.

Not only do adapters perform well, they are also easy to implement. This adapter modules repo contains many pre-trained adapter modules that can be downloaded and applied to your task right away. I will show a hands-on code example in the follow-up post!
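A minimal NumPy sketch of a bottleneck adapter shows both the near-identity start and the small parameter count. The assumptions are mine: a ReLU nonlinearity, a bottleneck size of 64, hidden size 768 (RoBERTa-base), and a tiny initial standard deviation; the class name `Adapter` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


class Adapter:
    """Bottleneck adapter: down-project, nonlinearity, up-project, plus a skip connection."""

    def __init__(self, hidden_dim, bottleneck_dim, init_std=1e-3):
        # Tiny initial weights keep the adapter close to the identity function at the start.
        self.w_down = rng.normal(0.0, init_std, size=(hidden_dim, bottleneck_dim))
        self.b_down = np.zeros(bottleneck_dim)
        self.w_up = rng.normal(0.0, init_std, size=(bottleneck_dim, hidden_dim))
        self.b_up = np.zeros(hidden_dim)

    def __call__(self, h):
        z = np.maximum(h @ self.w_down + self.b_down, 0.0)  # ReLU in the bottleneck
        return h + z @ self.w_up + self.b_up  # residual: output = input + small correction

    def num_params(self):
        return sum(p.size for p in (self.w_down, self.b_down, self.w_up, self.b_up))


adapter = Adapter(hidden_dim=768, bottleneck_dim=64)
h = rng.normal(size=(4, 768))  # stand-in for the hidden states of 4 tokens
out = adapter(h)
print(np.abs(out - h).max())  # tiny: the adapter starts out (nearly) as the identity
print(adapter.num_params())
```

With two such adapters per layer and 12 layers, this adds roughly 2.4 million trainable parameters, on the order of 2% of a model with roughly 125 million parameters like RoBERTa-base.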
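The fusion step can be sketched as attention over the adapters' outputs: the layer's hidden states act as the query, and each task adapter's output provides a key and a value. This is a minimal NumPy sketch of that idea under simplifying assumptions (random stand-in data, tiny dimensions, no residual connection, and none of the paper's specific initialization); only the query, key and value matrices would be trained here while the task adapters stay frozen.

```python
import numpy as np

rng = np.random.default_rng(0)


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def adapter_fusion(h, adapter_outputs, w_query, w_key, w_value):
    """Combine the outputs of several task adapters with attention.

    h:               (tokens, d) hidden states of the layer, used as the query
    adapter_outputs: (num_adapters, tokens, d) output of each frozen task adapter
    w_query/w_key/w_value: (d, d) fusion parameters, the only ones trained here
    """
    q = h @ w_query                          # (tokens, d)
    k = adapter_outputs @ w_key              # (num_adapters, tokens, d)
    v = adapter_outputs @ w_value            # (num_adapters, tokens, d)
    scores = np.einsum("td,ntd->tn", q, k)   # one score per token and adapter
    weights = softmax(scores, axis=-1)       # (tokens, num_adapters), rows sum to 1
    fused = np.einsum("tn,ntd->td", weights, v)
    return fused, weights


d, tokens, num_adapters = 16, 5, 3
h = rng.normal(size=(tokens, d))
adapter_outputs = rng.normal(size=(num_adapters, tokens, d))
w_q, w_k, w_v = (rng.normal(scale=0.1, size=(d, d)) for _ in range(3))
fused, weights = adapter_fusion(h, adapter_outputs, w_q, w_k, w_v)
print(fused.shape, weights.sum(axis=-1))
```

The learned weights let each token decide how much to rely on each task's adapter, which is how information flows between tasks.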

Bias tuning

Bias tuning (BitFit) is another straightforward parameter-efficient tuning approach. Only the bias parameters inside the model are updated (along with the new output layer), while the rest of the original network remains fixed. Biases are the vectors added to the outputs of the linear and normalization layers inside each Transformer layer; they simply shift the output activations after the matrix multiplication.

Biases make up a tiny fraction of the network (only 0.09% of the parameters of the full network). Bias tuning therefore trains even fewer parameters than adapter modules and doesn't require adding new layers to the network. It works surprisingly well on many classification tasks, performing on par with full fine-tuning and with parameter-efficient methods like adapters.

There are two downsides to bias tuning:

First, the authors find empirically that bias tuning doesn't scale well with dataset size. That is, as the training dataset grows, full fine-tuning starts performing considerably better than bias tuning (by >5% according to the plot in the paper).

Second, bias tuning is not as extensible as adapter modules.

Despite all of that, bias tuning deserves attention: it is the easiest parameter-efficient transfer learning approach to implement and try.
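To see how small the bias share is, the sketch below builds a hypothetical parameter table for one BERT-base-sized Transformer layer (hidden size 768, feed-forward size 3072; the parameter names imitate Hugging Face naming but are assumptions) and applies the BitFit selection rule: train a parameter only if its name ends in `.bias`.

```python
from math import prod


def bert_like_layer_shapes(d=768, ffn=3072, prefix="encoder.layer.0"):
    """Hypothetical parameter table for one BERT-base-sized Transformer layer."""
    shapes = {}
    for name in ("attention.self.query", "attention.self.key",
                 "attention.self.value", "attention.output.dense"):
        shapes[f"{prefix}.{name}.weight"] = (d, d)
        shapes[f"{prefix}.{name}.bias"] = (d,)
    shapes[f"{prefix}.attention.output.LayerNorm.weight"] = (d,)
    shapes[f"{prefix}.attention.output.LayerNorm.bias"] = (d,)
    shapes[f"{prefix}.intermediate.dense.weight"] = (ffn, d)
    shapes[f"{prefix}.intermediate.dense.bias"] = (ffn,)
    shapes[f"{prefix}.output.dense.weight"] = (d, ffn)
    shapes[f"{prefix}.output.dense.bias"] = (d,)
    shapes[f"{prefix}.output.LayerNorm.weight"] = (d,)
    shapes[f"{prefix}.output.LayerNorm.bias"] = (d,)
    return shapes


def is_trainable_under_bitfit(name):
    # BitFit rule: only bias vectors are updated (the new output layer would be trained too).
    return name.endswith(".bias")


shapes = bert_like_layer_shapes()
total = sum(prod(s) for s in shapes.values())
trainable = sum(prod(s) for n, s in shapes.items() if is_trainable_under_bitfit(n))
print(f"{trainable} of {total} parameters trainable ({100 * trainable / total:.3f}%)")
```

Across a whole model the fraction is even smaller, because the large embedding matrices have no biases. In PyTorch the same rule is typically applied by looping over `model.named_parameters()` and setting `param.requires_grad = name.endswith("bias") or name.startswith("classifier")`, assuming (as in RoBERTa's sequence-classification model) that the new head's parameters start with `classifier`.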

Low-Rank Adaptation (LoRA)

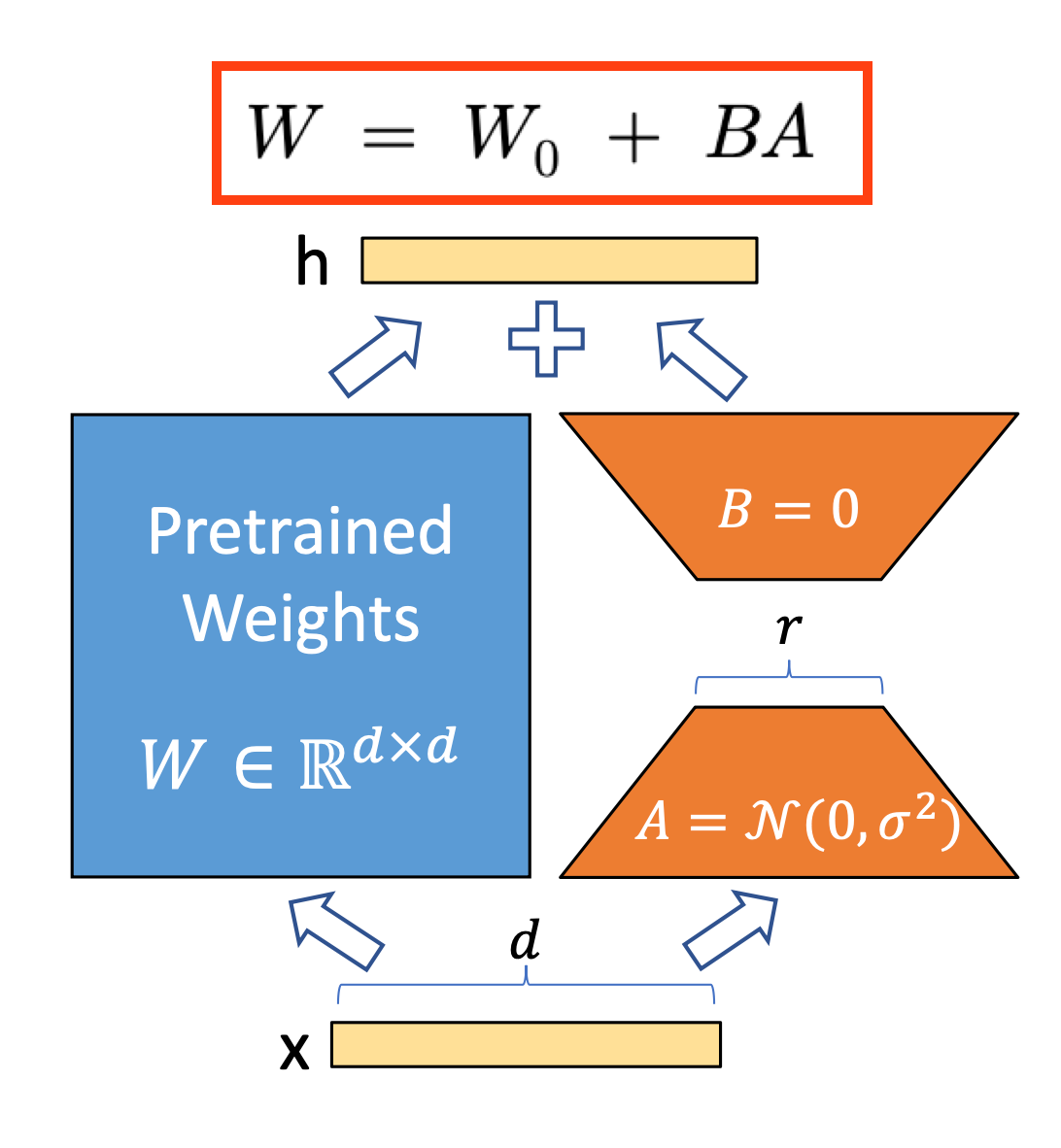

LoRA, a recent paper from Microsoft, proposes a parameter-efficient transfer learning approach with strong results on the 175-billion-parameter GPT-3 model. The idea is quite simple: freeze the parameters of the original GPT-3 and add trainable rank decomposition matrices to each layer of the Transformer. Let's unpack that sentence.

The weight matrices in GPT-3 are full-rank. In the context of this work, the authors use the term full-rank to imply that both the input and output dimensions d of a weight matrix are very large. For GPT-3 in particular, d = 12,288, so a single d x d matrix holds 12,288² ≈ 151 million parameters. That is A LOT of parameters for one matrix.

The product of a d x r matrix and an r x d matrix is itself a d x d matrix, but its rank is at most r, and it is described by only 2dr numbers instead of d². A full-rank matrix cannot be written this way when r is smaller than d. LoRA's hypothesis is that the change to the weights during adaptation has a low intrinsic rank, so the weight update ΔW can be well approximated by such a product. That's what the authors of LoRA use to achieve parameter-efficient transfer for GPT-3.

They create two new matrices, B of size d x r and A of size r x d, and use their product BA as the weight update, so the adapted layer computes Wx + BAx. With a very small rank such as r = 1 or 2, this adds only a few parameters (2 × 12,288 = 24,576 per adapted matrix when r = 1). Most importantly, they freeze the original parameters of GPT-3 and only update the newly introduced low-rank matrices B and A in each Transformer layer.

The results of LoRA are very strong. Compared with full fine-tuning of GPT-3, LoRA reduces the number of trainable parameters by 10,000 times (the per-task checkpoint shrinks from 350 GB to 35 MB) and the GPU memory requirement by 3 times. On top of these parameter savings, LoRA slightly outperforms adapter modules and BitFit.

With strong results and impressive parameter savings, LoRA is my current top choice for parameter-efficient transfer learning in NLP. I will show a hands-on implementation in a Colab notebook in the next post.
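A quick NumPy check of the rank argument: multiplying a d x r matrix by an r x d matrix yields a full-size d x d matrix whose rank is only r. The sketch uses d = 512 instead of GPT-3's 12,288 to keep it fast; the random matrices are arbitrary stand-ins.

```python
import numpy as np

rng = np.random.default_rng(0)
d, r = 512, 2  # small d for speed; GPT-3 uses d = 12,288

B = rng.normal(size=(d, r))
A = rng.normal(size=(r, d))
delta_w = B @ A  # a d x d matrix built from only 2 * d * r numbers

print(np.linalg.matrix_rank(delta_w))  # at most r
print(d * d, "entries in delta_w vs", B.size + A.size, "stored parameters")
```

This is why LoRA can only represent low-rank updates, and why it relies on the update, not the pre-trained matrix itself, being low-rank.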
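Putting it together, here is a minimal NumPy sketch of a LoRA-adapted linear layer. It follows the LoRA paper's description as I understand it (A initialized with small random values, B with zeros so that BA = 0 at the start, and the update scaled by alpha / r), but the class name `LoRALinear`, the sizes and the initialization scale are illustrative assumptions, and no training loop is shown.

```python
import numpy as np

rng = np.random.default_rng(0)


class LoRALinear:
    """Sketch of a LoRA-adapted linear map: y = W x + (alpha / r) * B A x."""

    def __init__(self, w_frozen, r=1, alpha=1.0):
        d_out, d_in = w_frozen.shape
        self.w = w_frozen                               # pre-trained weight, never updated
        self.a = rng.normal(0.0, 0.01, size=(r, d_in))  # trainable, random init
        self.b = np.zeros((d_out, r))                   # trainable, zero init so B A = 0 at start
        self.scale = alpha / r

    def __call__(self, x):
        # x has shape (batch, d_in); the low-rank path never forms the d_out x d_in product
        return x @ self.w.T + self.scale * (x @ self.a.T) @ self.b.T

    def merged_weight(self):
        # For deployment the update can be folded into W, so inference costs nothing extra
        return self.w + self.scale * self.b @ self.a

    def num_trainable(self):
        return self.a.size + self.b.size


d = 256
layer = LoRALinear(rng.normal(size=(d, d)), r=1)
x = rng.normal(size=(3, d))

print(np.allclose(layer(x), x @ layer.w.T))  # True: training starts from the original model
layer.b = rng.normal(size=layer.b.shape)     # pretend some training steps changed B
print(np.allclose(layer(x), x @ layer.merged_weight().T))
print(layer.num_trainable(), "trainable vs", layer.w.size, "frozen")
```

With r = 1 the trainable count is 2d, which for GPT-3's d = 12,288 gives the 24,576 parameters per adapted matrix mentioned above.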

Next Steps

Congrats, you made it through the most hands-on and technical post so far on this substack!

Hope it all made sense. I recommend you check out this list of references on parameter-efficient transfer learning. If you have questions, feel free to DM me on Twitter!

In the follow-up posts, we will dive deeper into all three parameter-efficient transfer methods and implement them. See you soon!

I would really appreciate it if you could recommend this substack to your friends who are interested in this topic!
