How to win at the NLP game (Part 1)

Or how to go from amateur to great!

Hello everyone, hal here.

Despite another round of wild claims about AI, real progress is being made every day toward building AI systems that customers actually use and value.

We are blessed to live in the digital age, where we can quickly consume, learn from, and share information with others. The internet is filled with blog posts introducing natural language processing concepts, GitHub repositories with the latest technology, and arXiv papers on bleeding-edge language technology.

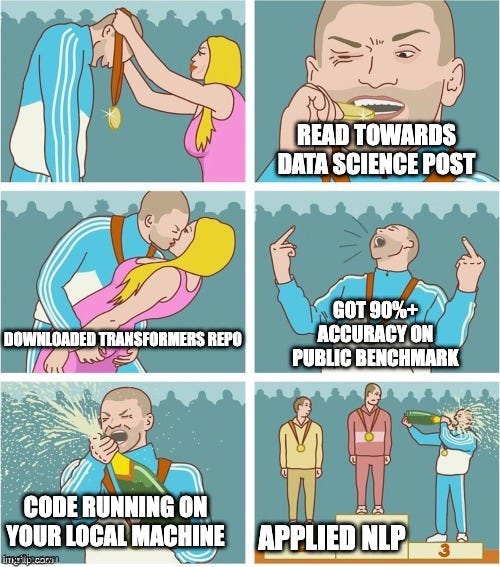

Make no mistake: there has never been a better time to learn and try out language technology for free. But no matter how much of that material we consume, it won’t get us to mastery of this technology. Building practical language applications takes more than picking a state-of-the-art approach, blindly applying it to a dataset someone else collected, getting 90+% accuracy, and declaring victory.

In fact, once you deploy your solution, you stumble upon problems you never expected. Silent failures of your model start to creep in. Customers never even get to see the predictions because of a pre-processing error. The model’s calibration is wildly off.
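A calibration problem like the one above is easy to miss if you only look at accuracy, because it shows up as a gap between how confident the model is and how often it is actually right. As a minimal sketch, assuming you have the model's top-class probability and a correct/incorrect flag for each example, the snippet below computes expected calibration error (ECE) with equal-width bins in plain Python. The function name `expected_calibration_error`, the 10-bin default, and the example numbers are illustrative assumptions, not part of any particular library or of the stack described later.

```python
from typing import Sequence


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,
) -> float:
    """Equal-width binned expected calibration error (ECE).

    confidences: the model's top-class probability for each example, in [0, 1].
    correct: whether the top-class prediction matched the gold label.
    (Hypothetical helper written for illustration.)
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    if not confidences:
        raise ValueError("need at least one prediction")

    # Per-bin running totals: summed confidence, number correct, number of examples.
    conf_sum = [0.0] * n_bins
    hit_sum = [0] * n_bins
    count = [0] * n_bins
    for conf, ok in zip(confidences, correct):
        # Map confidence to a bin index; a confidence of exactly 1.0 goes in the last bin.
        b = min(int(conf * n_bins), n_bins - 1)
        conf_sum[b] += conf
        hit_sum[b] += int(ok)
        count[b] += 1

    n = len(confidences)
    ece = 0.0
    for b in range(n_bins):
        if count[b] == 0:
            continue
        avg_conf = conf_sum[b] / count[b]
        accuracy = hit_sum[b] / count[b]
        # Weight each bin's |accuracy - confidence| gap by its share of all examples.
        ece += (count[b] / n) * abs(accuracy - avg_conf)
    return ece


if __name__ == "__main__":
    # A model that is 99% confident on every example but right only 9 times out of 10.
    confs = [0.99] * 10
    hits = [True] * 9 + [False]
    print(round(expected_calibration_error(confs, hits), 4))  # prints 0.09
```

In this toy case the model scores 90% accuracy, which looks fine on its own, yet it overstates its confidence by about nine points on every prediction. Tracking a number like this alongside accuracy is one simple way to notice that kind of drift after deployment.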

What should you fix in this situation?

Debugging and understanding errors in machine learning is much harder than in traditional software development. The good news is that if you get your core development stack right, these issues become significantly easier to identify and fix.

Now let us dive into the language application development stack.